I used a magnetometer IC at a previous job and was amazed at how poorly it worked as a compass. As it turns out, this is not the fault of the magnetometer (though they do have their quirks), but rather that the environment is surprisingly magnetically active compared to the background field of the Earth. Currents in nearby USB cables on the desk, rebar in concrete walls, and the like all significantly distort the local field.

I like sensing technology, and was interested in making a scanner using one of these magnetometers. I do not think this is useful except for pedagogy, but there could maybe be some interest in forming CAT-scan-style images (reconstruction would suffer from the same inverse problem that EEG and EMG have).

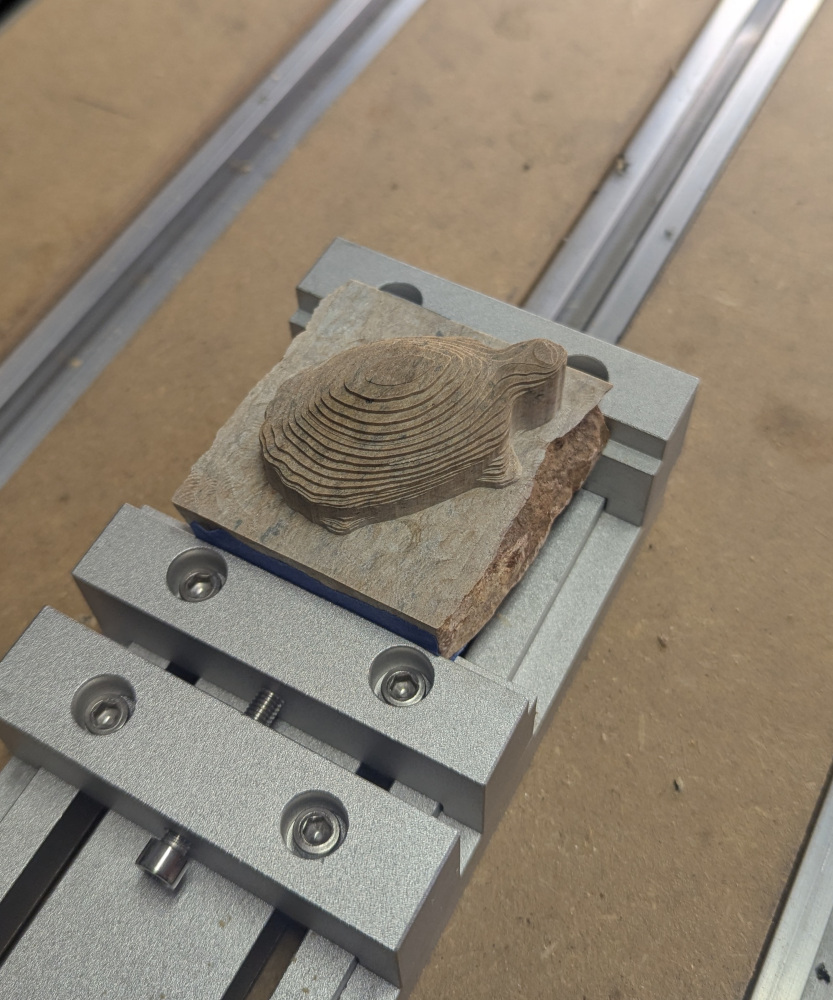

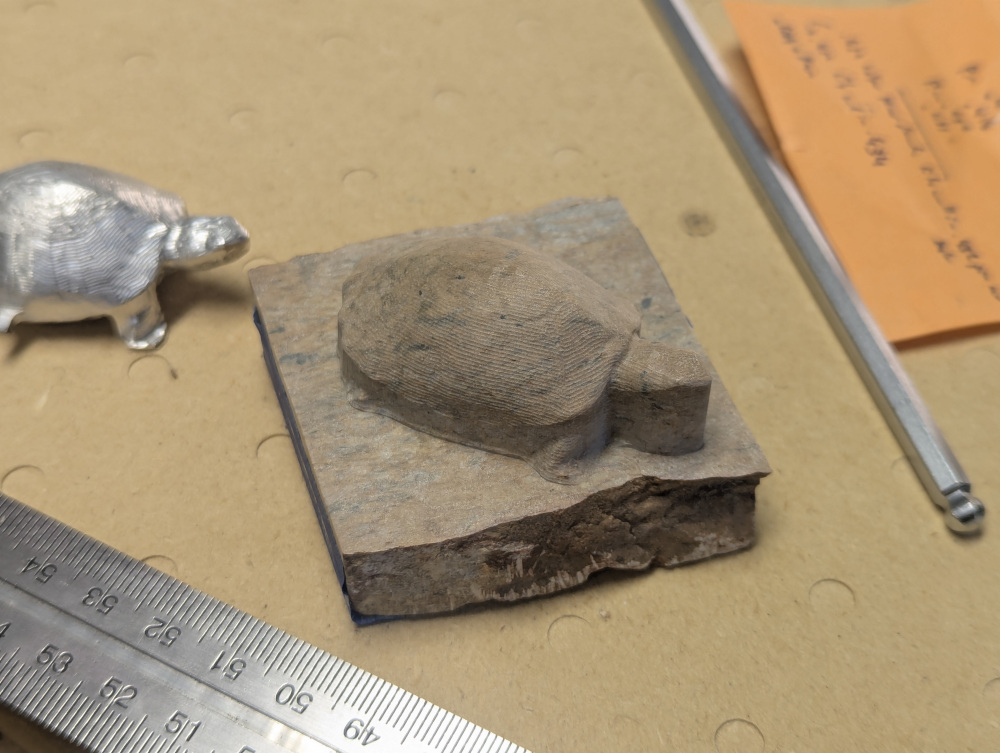

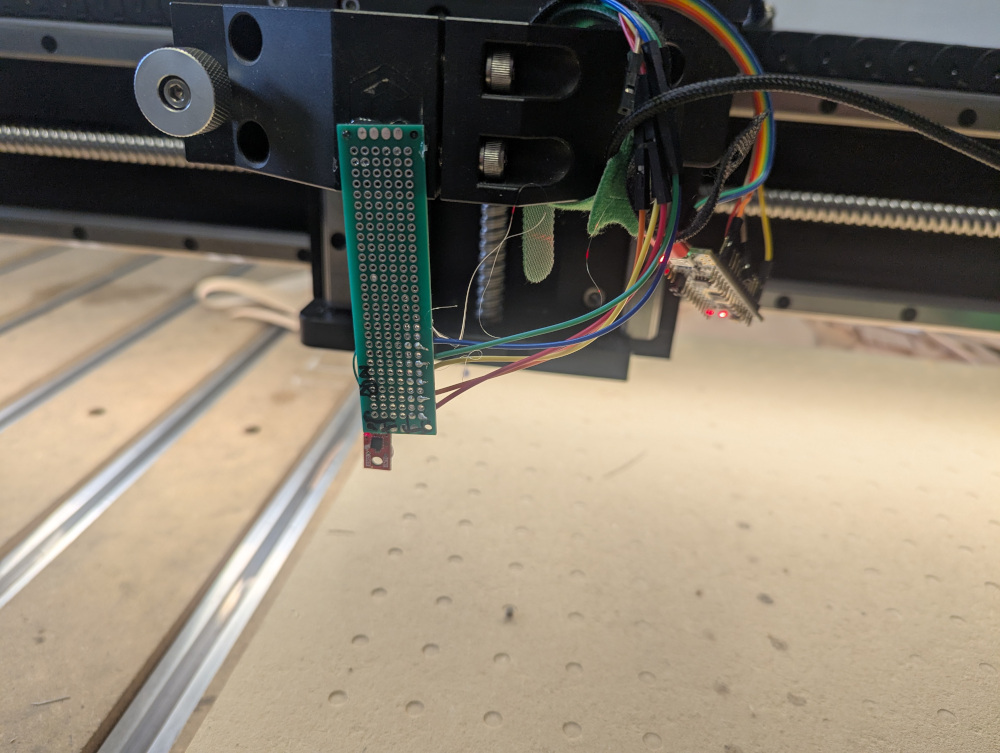

For the setup, I used a SparkFun magnetometer breakout board (MMC5983) hooked up to a Nucleo board that polled it at regular intervals and reported the data back to a computer over serial. Mounting it onto the Shapeoko let me keep it a decent distance from the motors and control its position with G-code.
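As a rough illustration of driving the scan with G-code, here is a minimal sketch that generates a serpentine raster. The function name `raster_gcode`, the scan dimensions, the line spacing, and the feed rate are all made up for the example and are not the values from the actual scan. It only uses the standard `G21` (millimeters), `G90` (absolute positioning), `G0`, and `G1` commands.

```python
def raster_gcode(x_size, y_size, line_spacing, feed_mm_per_min):
    """Return G-code lines for a back-and-forth (serpentine) XY raster.

    All parameters are hypothetical example values in millimeters
    (and mm/min for the feed rate).
    """
    lines = [
        "G21",        # units: millimeters
        "G90",        # absolute positioning
        "G0 X0 Y0",   # rapid move to the scan origin
    ]
    n_rows = int(y_size // line_spacing) + 1
    for row in range(n_rows):
        y = row * line_spacing
        # Even rows sweep toward +X, odd rows sweep back toward X0.
        x_end = x_size if row % 2 == 0 else 0.0
        if row > 0:
            lines.append(f"G1 Y{y:.3f} F{feed_mm_per_min}")
        lines.append(f"G1 X{x_end:.3f} F{feed_mm_per_min}")
    return lines


if __name__ == "__main__":
    print("\n".join(raster_gcode(100, 20, 10, 600)))
```

Keeping the whole scan at a single feed rate keeps the timing of the motion simple to reason about.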

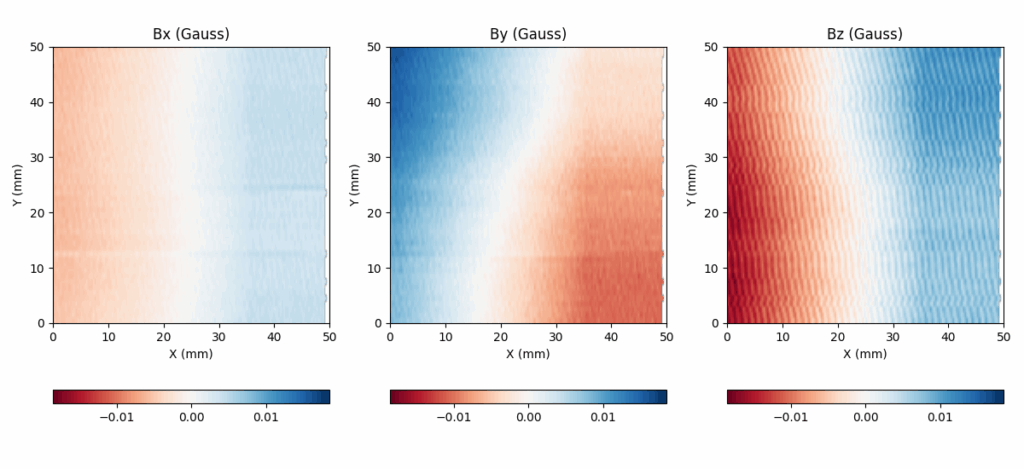

As this was a quick project, I assigned the readings to locations based purely on their time within the scan motion. The only calibration was subtracting a background scan and applying coordinate transforms as if the chip were exactly aligned with the machine coordinate system.
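The time-to-position mapping and the background subtraction can be sketched roughly like this. This is an illustration, not the original script. It assumes the head moves along a known list of waypoints at a constant feed rate (ignoring acceleration), that the background scan was sampled at the same points as the sample scan, and that the sensor axes map to the machine axes through a fixed matrix (identity by default). The names `positions_from_time` and `calibrate` are hypothetical.

```python
import numpy as np


def positions_from_time(t, waypoints, feed_rate):
    """Map sample times (s) to XY positions (mm) along a polyline.

    Assumes constant feed_rate (mm/s) with no acceleration ramps.
    """
    waypoints = np.asarray(waypoints, dtype=float)
    seg_len = np.linalg.norm(np.diff(waypoints, axis=0), axis=1)
    # Time at which the head reaches each waypoint.
    t_way = np.concatenate([[0.0], np.cumsum(seg_len)]) / feed_rate
    x = np.interp(t, t_way, waypoints[:, 0])
    y = np.interp(t, t_way, waypoints[:, 1])
    return np.column_stack([x, y])


def calibrate(scan_b, background_b, sensor_to_machine=None):
    """Subtract a background scan and rotate into machine coordinates.

    scan_b and background_b are (N, 3) arrays sampled at the same points.
    sensor_to_machine is a 3x3 matrix; identity means the chip is assumed
    to be exactly aligned with the machine axes.
    """
    if sensor_to_machine is None:
        sensor_to_machine = np.eye(3)
    diff = np.asarray(scan_b, dtype=float) - np.asarray(background_b, dtype=float)
    return diff @ np.asarray(sensor_to_machine).T


if __name__ == "__main__":
    t = np.array([0.0, 0.5, 1.0, 1.5, 2.0])
    print(positions_from_time(t, [(0, 0), (10, 0), (10, 10)], feed_rate=10.0))
```

Any timing drift between the serial stream and the motion shows up directly as position error, which is the main weakness of assigning positions by time alone.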

I imaged a cell phone and could see the fields of (presumably) speaker magnets at the top and bottom.

Field lines are visualized by integrating the motion of randomly placed test particles along the measured direction of the magnetic field. I made these plots for a pair of small magnets, one oriented vertically and the other horizontally.
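A minimal 2D sketch of that kind of field-line tracing is below. It assumes the in-plane field has already been resampled onto a regular grid of shape `(ny, nx, 2)`, and it uses bilinear interpolation with a fixed-step midpoint integrator. Those choices, the function names, and the step size are for illustration only and are not necessarily what produced the plots.

```python
import numpy as np


def bilinear(field, x, y):
    """Sample a (ny, nx, 2) vector field at fractional grid coords (x, y)."""
    ny, nx = field.shape[:2]
    x = min(max(x, 0.0), nx - 1.0)
    y = min(max(y, 0.0), ny - 1.0)
    x0 = min(int(x), nx - 2)
    y0 = min(int(y), ny - 2)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * field[y0, x0] + fx * field[y0, x0 + 1]
    bot = (1 - fx) * field[y0 + 1, x0] + fx * field[y0 + 1, x0 + 1]
    return (1 - fy) * top + fy * bot


def trace_field_line(field, seed, step=0.25, n_steps=400):
    """Follow the field direction from seed (x, y) in grid units."""
    ny, nx = field.shape[:2]
    p = np.array(seed, dtype=float)
    path = [p.copy()]
    for _ in range(n_steps):
        b = bilinear(field, p[0], p[1])
        norm = np.linalg.norm(b)
        if norm < 1e-12:
            break  # no direction defined where the field vanishes
        # Midpoint step along the unit field direction.
        mid = p + 0.5 * step * b / norm
        b_mid = bilinear(field, mid[0], mid[1])
        norm_mid = np.linalg.norm(b_mid)
        if norm_mid < 1e-12:
            break
        p = p + step * b_mid / norm_mid
        if not (0 <= p[0] <= nx - 1 and 0 <= p[1] <= ny - 1):
            break  # left the scanned area
        path.append(p.copy())
    return np.array(path)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    demo = np.zeros((20, 30, 2))
    demo[..., 0] = 1.0  # synthetic uniform field along +x, not measured data
    seeds = rng.uniform([0, 0], [29, 19], size=(5, 2))
    lines = [trace_field_line(demo, s) for s in seeds]
    print([len(line) for line in lines])
```

Stepping along the normalized direction rather than the raw field keeps the step length constant, so lines stay evenly sampled in both strong and weak field regions.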

I would like to redo this with a new board that has magnetic excitation coils (so the Earth’s field isn’t necessary for imaging non-magnetized materials) and a design that lets the magnetometer be moved almost flush with the sample being imaged. I’m pretty sure one could image, if not inspect, ENIG PCBs with this sort of technique.